The ability to sift through and analyze vast amounts of customer feedback across various formats and sources is not just advantageous for businesses—it's essential. Companies are bombarded with data from product reviews, support chats, social media interactions, and survey responses, presenting a complex challenge: how to distill this data into actionable insights. While Large Language Models (LLMs) like OpenAI's ChatGPT offer a promising solution for tackling this task, their broad application often falls short of addressing the nuanced demands of data analysis. This is precisely why we have spent years designing an app to overcome the limitations of general purpose LLMs to deliver refined, reliable, and insightful analysis.

Clustering Algorithms vs. General Purpose Large Language Models (LLMs)

Clustering algorithms are a fundamental tool in data analysis for grouping together data points based on their similarities. They achieve this by modeling the relationship between data points as a measure of distance within a multi-dimensional space. Each dimension represents a feature or attribute of the data, allowing these algorithms to consider multiple aspects simultaneously when determining similarity. The closer two data points are in this space, the more similar they are considered to be. This approach not only makes it possible to identify natural groupings within the data but also to visualize these relationships, offering an interpretable map of how different data points relate to one another. Such visualizations can be invaluable for understanding complex datasets, revealing patterns, and relationships that might not be immediately obvious.

In contrast, Large Language Models (LLMs) like ChatGPT, when tasked with agglomerating or summarizing data, do not operate on the principle of measuring distances between data points. Instead, they generate summaries based on the textual content they process, guided by the patterns learned during their training. While LLMs can identify themes or topics within the data and generate coherent summaries, they do not inherently model the multi-dimensional relationships between data points in a way that is directly interpretable or visualizable. The output of LLM agglomerations is textual and lacks the explicit, measurable structure that clustering algorithms provide through their distance-based models.

This fundamental difference highlights the unique value of clustering algorithms in exploratory data analysis and situations where understanding the structure and relationships within the data is crucial. For example, in customer segmentation, clustering algorithms can visually map out customer groups based on purchasing behavior, demographics, and other relevant features, offering clear insights into market structure that can inform targeted marketing strategies. LLMs, while powerful in processing and generating text, cannot provide the same level of interpretability and visualization of data relationships.

We’ve designed Viable’s platform using a sophisticated combination of clustering algorithms, proprietary AI models, and GPT-4 to deliver precise, reliable, and consistent insights that overcome the limitations of data analysis using LLMs alone. Here’s how:

Boost customer satisfaction with precise insights

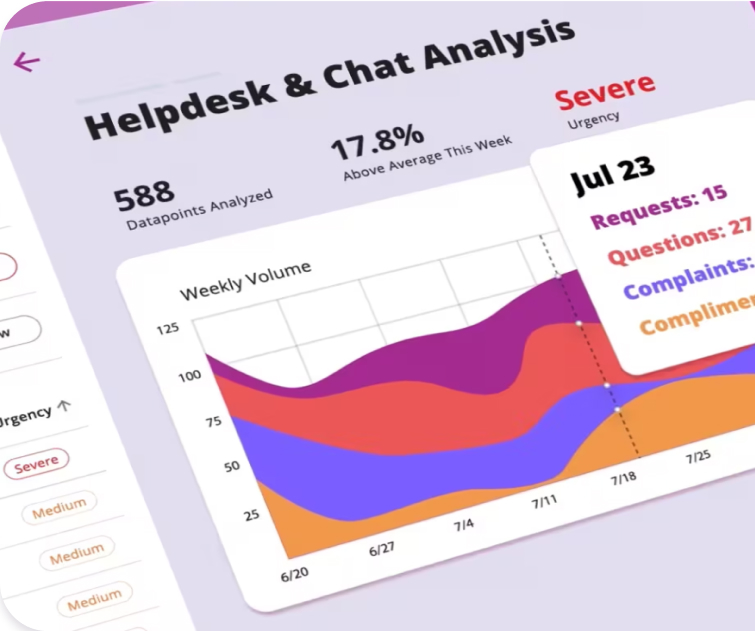

Surface the most urgent topics by telling our AI what matters to you.

1. Coping with Noise

One of the primary challenges with LLMs is their struggle to filter out irrelevant data ("noise") in feedback. Unlike clustering algorithms used by Viable, which model density and thus naturally separate relevant data from any low-density outliers, LLMs lack an inherent mechanism for distinguishing valuable insights related to your product from irrelevant information such as discussions about the weather.

In a scenario where a new video game product launch garners mixed feedback, an LLM might weigh all responses in a Reddit thread equally, including off-topic rants. Viable, however, distinguishes between pertinent product feedback and irrelevant noise, ensuring that only meaningful insights are analyzed.

2. Context Window Limitations

LLMs have a structural limitation known as "context window limits." This refers to the maximum amount of text (measured in tokens, where a token can be a word or part of a word) that the model can consider in a single instance of processing. For instance, ChatGPT Enterprise and ChatGPT Plus have context window limits of 128K and 32K respectively, which means they can only "see" or analyze up to 128,000 or 32,000 tokens of input at any given time.

When analyzing long texts or data that span across multiple segments exceeding this limit, the text must be divided into smaller portions that fit within the model's context window. Each segment is processed independently as context window 1, 2, 3, and so on. However, the challenge arises in maintaining coherence and continuity across these segmented analyses. Since each segment is analyzed in isolation, understanding or insights derived from one segment may not automatically inform the analysis of subsequent segments.

To address this, a reconciliation step is necessary. This involves integrating the insights or understanding derived from each independent segment analysis to form a cohesive interpretation of the entire text or dataset. The reconciliation process requires additional logic and methods to ensure that the context and insights from one window are correctly linked with those from another. For instance, if the first segment of a customer feedback text describes an issue with a product, and the subsequent segment offers specific details about the issue, the reconciliation step must accurately connect these details to provide a comprehensive understanding of the feedback.

This reconciliation step is not straightforward and introduces complexity into the analysis process. It demands sophisticated handling to ensure that the overall context is not lost and that the analysis accurately reflects the full scope of the input data. This is especially crucial for tasks like analyzing customer feedback, where understanding the full context and nuances of the feedback is essential for deriving actionable insights. Without effective reconciliation, important details might be overlooked or misinterpreted, potentially leading to incomplete or inaccurate conclusions.

Additionally, most LLMs are constrained by output space limits, often not exceeding 2,000 tokens (approximately 1,500-2,000 words). This limitation becomes a significant challenge when tasks require generating long-form content or detailed analysis that surpasses this token limit.

3. Ensuring Consistent Interpretation

Direct input of raw feedback into zero-shot LLMs like ChatGPT often leads to inconsistent categorization due to:

- Inconsistent Extraction: LLMs may not accurately differentiate between compliments, complaints, questions, and requests due to their generalized processing approach.

- Abstraction and Splitting Issues: Feedback containing multiple elements might not be coherently split into distinct CCQR aspects, leading to a loss of nuanced insights.

- Variability Across Inferences: LLM analysis can vary with each inference and context window, resulting in inconsistent interpretations of similar feedback.

Viable transforms raw customer feedback into categorized insights using the CCQR framework (Compliment, Complaint, Question, Request), ensuring a structured and actionable analysis. This process, called distillation, assigns each piece of feedback to one of these categories for a consistent interpretation across the dataset.

Consider this feedback: "Love the design of the new model, but why is it taking so long to ship? Also, can you offer it in blue?"

- Viable's Approach: Distillation categorizes this into a compliment about the design, a question about shipping, and a request for more color options, enabling targeted insights and actions.

- LLM Analysis: An LLM might provide a general summary that captures sentiment and inquiries but lacks the structured breakdown into CCQR aspects for actionable analysis.

This framework through distillation ensures feedback is analyzed in a structured, consistent manner, addressing the limitations often encountered with LLMs. This approach not only enhances the reliability of insights drawn from customer feedback but also ensures they are directly actionable.

4. The Challenge of Agglomeration

LLMs struggle with reliably producing exhaustive and coherent agglomerations, and their behavior in this regard is difficult to adjust. We have built Viable to offer tunable clustering algorithms that not only ensure comprehensive data aggregation, but also allow for adjustments based on specific analysis needs.

Challenges with LLM Agglomeration

- Non-exhaustive Outputs: When an LLM is tasked with agglomerating data points—essentially, grouping together similar pieces of information to form a coherent summary or analysis—it often fails to be exhaustive. This means it might not capture or include all relevant data points in the aggregation process, leading to incomplete analyses.

- Inconsistency Across Inferences: The agglomeration behavior of LLMs can vary significantly from one inference to another. An "inference" in this context refers to the process of the model generating output based on a given input. This variability can be influenced by different factors, such as the specific wording of the input, the model's current "state" based on previous interactions (in interactive or session-based models), or even updates to the model itself.

- Variability with Temperature and Input: The term "temp" refers to temperature settings in generative models that control randomness in the output. Different temperature settings can lead to different agglomerations for the same set of data. Similarly, slight variations in input can also lead to drastically different agglomerations.

- Lack of Interpretability and Determinism: Because of the aforementioned variability and the general opaqueness of how LLMs process data, the resulting agglomerations can be difficult to interpret or predict. This contrasts with more deterministic methods, where the output is more directly traceable to the input and the processing rules applied.

Clustering Algorithms as a Solution

In contrast, clustering algorithms, which are designed specifically for the task of grouping similar data points, offer a more reliable and interpretable approach. They are deterministic, meaning the same input will consistently produce the same output, and they are designed to be exhaustive, ensuring that all relevant data points are considered and appropriately grouped. Clustering algorithms work based on clear, definable metrics for similarity, which allows for their outputs to be interpretable—understanding why a particular data point was included in a cluster is straightforward based on the defined metrics.

Example: Consider a set of customer feedback comments about a product. If tasked with agglomerating comments that express similar sentiments, an LLM might group them differently depending on slight variations in how the sentiment is expressed or based on the model's temperature setting, potentially omitting relevant comments or including unrelated ones. A clustering algorithm, however, would consistently group these comments based on a defined metric of similarity (e.g., keyword presence, sentiment score), ensuring a comprehensive and understandable aggregation.

While LLMs offer broad capabilities for generating human-like text and can perform a range of tasks, their application to specific data analysis tasks like agglomeration presents challenges in terms of reliability, consistency, and interpretability. Clustering algorithms are better suited for these tasks due to their deterministic nature and the clarity with which they operate.

5. Tuning Data Analysis to be Flexible

LLMs are designed to generate text based on learned patterns, making their agglomeration behavior — the process of summarizing or grouping similar data — hard to adjust without extensive retraining. This lack of flexibility limits their practicality for specific data grouping tasks. In contrast, clustering algorithms are inherently tunable, allowing users to easily adjust how data is grouped by changing parameters or the algorithm's configuration. This flexibility makes them ideal for tailored data analysis tasks.

When analyzing customer feedback about a new product feature, adjusting an LLM to focus specifically on feature-related comments is complex and resource-intensive. In contrast, a clustering algorithm can be quickly tuned to emphasize keywords related to the feature, making it a more efficient choice for this task. This tunability enables precise adjustments to the data grouping process, catering to specific analysis needs without the need for extensive computational resources.

6. Eliminating Hallucinations

Zero-shot LLMs like ChatGPT are trained on vast datasets to predict the next token in a sequence, making them capable of generating new text based on the patterns they've learned. While this ability is valuable for creating human-like responses or filling in gaps in information, it also introduces the risk of generating content that reflects the model's "predictions" rather than the actual data. In the context of summarizing data, this means an LLM might infer connections or themes that aren't supported by the data, effectively "hallucinating" cluster information.

If you were analyzing customer reviews to identify common themes, an LLM might "hallucinate" a theme of dissatisfaction related to customer service, even if most reviews focus on product features, because it "predicts" this theme based on learned associations. A clustering algorithm, however, would only create clusters based on the actual content of the reviews, such as grouping together all mentions of a specific feature, without introducing unrelated themes.

This fundamental difference underscores why clustering algorithms are preferred for tasks requiring precise and faithful representation of data, as they mitigate the risk of introducing inaccuracies or fabrications into the analysis.

7. Handling Sensitive Content

Reinforcement Learning from Human Feedback (RLHF) fine-tunes Large Language Models (LLMs) to align with ethical and social standards by modifying responses based on human feedback to help avoid generating inappropriate content. However, this can lead to unpredictable behavior when handling sensitive content for several reasons:

- Subjectivity: Sensitivity is subjective, and RLHF, while attempting to generalize from human feedback, might not capture the full spectrum of what is considered sensitive across different users.

- Overcorrection: To avoid inappropriate content, the model might overly suppress or alter benign responses, leading to unpredictability in its handling of sensitive topics.

- Feedback Variability: The variability and bias in human feedback used for RLHF can cause the model to respond inconsistently to sensitive queries, reflecting the bias of the feedback rather than a balanced approach.

A social media manager looking to understand public sentiment expects a balanced summary, highlighting both positive feedback and criticism. However, due to RLHF's influence, the model might overly censor or downplay inflammatory responses, focusing instead on neutral or positive comments. This behavior stems from its training to avoid generating or amplifying sensitive or offensive content. As a result, the summary provided by the model might fail to accurately reflect the intensity of the negative sentiment or the specific issues causing discontent among the public.

8. Instability of Zero-Shot Behavior

Zero-shot behavior refers to the ability of a foundation model, like a Large Language Model (LLM), to perform tasks it wasn't explicitly trained to do, using only the instructions given at the time of the query, without any additional fine-tuning or training examples. This capability is a hallmark of the flexibility and general applicability of such models. However, this behavior introduces a level of unpredictability, especially as the model undergoes iterations or updates.

As foundation models are iterated—either through retraining to improve their capabilities or to expand their knowledge base—their behavior on tasks where they have no specific training (zero-shot tasks) can change. This is because the model's understanding and internal representations are updated, potentially altering how it interprets instructions and processes data.

The meta-layer of unpredictability arises from the dynamic nature of these models. Even if a model has demonstrated well-profiled behavior—meaning it has been observed to perform reliably under certain temperatures (a parameter influencing randomness in responses), with specific types of input data, or in certain formats—this performance can shift unexpectedly after an update. Such updates might optimize the model for certain types of tasks or improve its overall accuracy, but they can also inadvertently affect its zero-shot capabilities in unforeseen ways.

Example: Imagine a foundation model that has been used reliably to generate summaries of financial reports in a zero-shot manner. It has been profiled to perform this task with a high degree of accuracy and consistency, given specific types of input data. However, after an update designed to improve its natural language understanding capabilities, its behavior might change. Suddenly, it could start emphasizing different aspects of the reports or interpreting the task in a new way, leading to summaries that focus on different information than before. This change happens because the update altered the model's internal representations or priorities, demonstrating the inherent unpredictability of zero-shot behavior in evolving models.

This unpredictability underscores the challenge of relying on zero-shot capabilities for critical tasks without continuous monitoring and adaptation to the model's evolving behavior.

9. Fine-Tuning Models for Optimal Analysis

The fine-tuning process specifically trains models beyond their original configuration to perform certain tasks more effectively. Our models are specifically trained to analyze complex datasets in a number of ways.

Cluster Quality & Agglomeration

The first is adjusting them to focus on the task of understanding unstructured data by evaluating how well the data has been grouped. This involves measuring the coherence within clusters (how similar the items in each cluster are to each other) and the separation between clusters (how distinct each cluster is from the others). The goal is to ensure that items within a cluster are as similar as possible while being clearly distinguishable from items in other clusters.The models not only group the data but do so in the most effective way possible, considering factors like minimizing the number of clusters while maximizing their relevance and distinctiveness.

Initially, a dataset of customer feedback is typically unorganized. By applying fine-tuned models, we can cluster these comments into meaningful groups, such as "product quality," "customer service," and "delivery issues." The fine-tuned models help determine the optimal number of clusters and assign each comment to the most relevant cluster. They also continuously assess the clustering quality, making adjustments as needed to ensure that each group accurately represents a distinct category of feedback. This process allows for a more structured analysis of the feedback, facilitating targeted responses and improvements.

Diversity of Data

Our fine-tuned analysis models utilize a diverse and normalized analysis template, robustly processing a wide range of text data formats and structures, ensuring consistent, reliable insights from both structured and messy datasets. The models excel at navigating through both quantitative and qualitative text data, from messy Zoom recordings involving multiple participants to direct, structured survey responses. The models standardize disparate data types into a consistent format for analysis, allowing for a seamless comparison and synthesis of insights across various sources. This approach guarantees that valuable information is captured and utilized, providing a well-rounded understanding of customer sentiments and experiences.

Consider a dataset containing recorded customer interviews for a range of products, with each interview varying in length, topic, sentiment, and language quality. Some might be concise and to the point, while others are verbose and off-topic. There could also be missing information, typos, or slang. The fine-tuned analysis models apply their "diverse and normalized template" to this dataset, standardizing the reviews to a format that can be uniformly analyzed, perhaps by extracting key phrases, categorizing sentiment, and ignoring irrelevant or redundant information. Despite the "messiness" of the initial data, these models can accurately identify trends, such as the most praised or criticized features of the products, providing valuable insights that can guide product development and marketing strategies.

10. Analyzing Metadata Insights

Large Language Models like ChatGPT are adept at summarizing data based on its content. However, these models face challenges when trying to adjust their analysis to consider secondary traits, such as metadata. Metadata encompasses additional information about the data, such as the department, role, and duration of an employee in a survey, or the region, operating software, and numerical star rating of a respondent in an app store review.

Given this limitation, LLMs struggle to tailor their analysis to highlight insights based on these metadata characteristics. For example, in analyzing an employee survey about a new HR policy, if a business wants to understand how the policy impacts different subgroups within the organization—like those in specific departments or with varying lengths of service—LLMs wouldn't easily adapt their summarization to spotlight differences based on these subgroups. The primary analysis would remain focused on the textual feedback, without delving into the nuanced impacts revealed by examining the metadata.

Ready to test and compare the analysis with your own data? Start your free trial.

%20(10).png)

%20(9).png)

%20(8).png)