This is a guest blog post by Sebastian Barbera, Viable’s Head of AI. Sebastian is an autodidact, having taught himself machine learning engineering and AI. He is an inventor with multiple software and hardware patents, and helped build Viable’s AI from Simple Unenriched Summarization to Multidimensional Analysis in a span of 17 months.

There’s an important truth that everyone needs to know as we watch AI become more and more ubiquitous: a machine learning model is not an app. Single models, regardless of their size, sophistication or training, have certain harsh limitations. Single models can’t outperform groups of models that have been augmented with code and specialized to solve a specific task, that is to say, an app. You can’t just strap wings on a jet engine and call it a safe, affordable consumer airliner. Likewise, you can't just feed your customer data to a naked LLM and expect it to provide reliable, scalable analysis. You need an app.

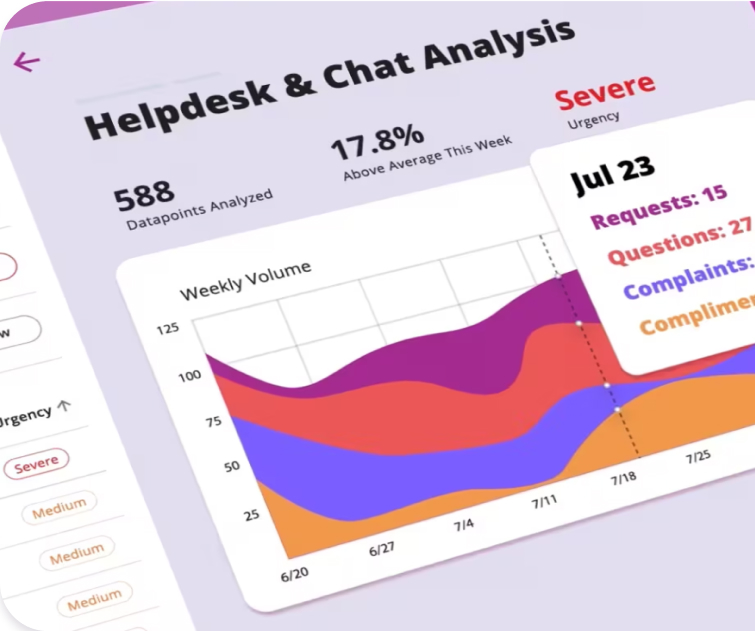


Our tool, AskViable, is an app built on a suite of models working together with dedicated infrastructure and algorithms. It is engineered from the ground up to be a scalable and reliable way to extract analysis from customer data. ChatGPT, GPT-4, Claude, and Gemini are just naked models. In this blog series I’m going to walk you through numerous reasons why using naked LLMs alone to process customer data is not only inefficient, but outright irresponsible.

At Viable we think about analysis as a sequence of tasks that build on top of one another. We call this “The Great Pyramid of Compute.” The lowest level of the pyramid is the processing of individual datapoints, and the very pinnacle of the pyramid is the high-level analysis of multiple entire datasets. At every level of this pyramid, AskViable outperforms a naked LLM.

At the very lowest foundational level of the pyramid is the feedback datapoint itself.

Feedback data can come in many shapes and sizes. There are NPS responses, which are usually short and to the point. There are app reviews, which are small but longer than the typical NPS response and often address several points. There is lengthy chat and social media data that can be hundreds of words long and mostly fluff, yet have several valuable insights buried in it. Then at the long end are call transcripts that can be thousands of words long, with only the thinnest throughline of diffuse actionable feedback spread across paragraphs. Coping with this variety of data types is a challenge, and naked LLMs will perform very differently depending on the nature of the data.

Viable has seen hundreds of millions of datapoints, and on average only 63% of customer data is actionable, with the rest being noise. Different data types can have dramatically different noise characteristics. Surveys are 90%+ signal, meaning almost everything is useful feedback. Gong transcripts, on the other hand, can have as little as 25% signal, with most of the data being meaningless chatter. If you drop 100 random datapoints into the context window of a naked LLM, it will have no sense of what’s useful and what’s trash. You’ll be left with a contaminated analysis that could do more harm than good.
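To make those percentages concrete, here is a minimal sketch of the arithmetic for a batch of 100 datapoints. It treats the rates quoted above as rough point estimates; the dictionary keys and the `expected_noise` helper are names made up for this illustration and are not part of AskViable.

```python
# Signal rates quoted in the post, treated here as rough point estimates.
SIGNAL_RATE = {
    "survey": 0.90,           # "90%+ signal"
    "gong_transcript": 0.25,  # "as little as 25% signal"
    "overall_average": 0.63,  # "on average only 63% ... is actionable"
}


def expected_noise(n_datapoints: int, signal_rate: float) -> int:
    """Expected count of noise datapoints in a batch, rounded to the nearest integer."""
    if not 0.0 <= signal_rate <= 1.0:
        raise ValueError("signal_rate must be between 0 and 1")
    return round(n_datapoints * (1.0 - signal_rate))


if __name__ == "__main__":
    for source, rate in SIGNAL_RATE.items():
        print(f"{source}: about {expected_noise(100, rate)} of 100 datapoints are noise")
```

At the average rate, roughly 37 of every 100 datapoints placed in a context window would be noise, and for a transcript-heavy batch the figure could approach 75.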

Below are some datapoints that show a gradient of signal characteristics.

The first is a small chunk of a Gong transcript. It demonstrates that even very long texts of customer feedback can still be completely useless, containing no actionable insights, and should thus be treated as noise.

(customer): We have a new partnership, well and existing an enhancement to an existing integration, but a larger partnership announcement coming up in the next couple of weeks that we think is going to be big new business driver for us. So want to get, you know, a lot of outreach like with breads team, but also up on the website and everything going. So that's the quick win. We have, I think three less than three week so. Awesome. So like these templates that come with like we can, if we don't want to use those pages, we can just pass them and then go with our own screenshots. Of course. Okay. Thank you. Okay, cool. Thank you. Correct. I was gonna say, I think full you're are you copied on the emails because I probably gonna start playing around and just start thinking of questions as I go? Perfect. Thank you. Maybe from like a very high level, I. Probably not enough but what my thought process was to show like the experience one so like kind of make it obvious how easy it is to set one set up the integration. So what I was gonna do is show like our integration page and then like actually like click through connecting the two and that's that. And then from there go into a dashboard. So probably like our demo environment and then show like what that unlocks in there. And then that's kind of all I had gotten through at that point. But it would be them or like as I load tour from like what you're in walking by connecting the two.

What you see is just a bunch of contextual chatter with no specific products or features mentioned, no clear sentiments, and no clear intents. This block is what we call “verbose noise”. This is not the kind of noise that can be easily blocked. Automated messages, representative messages, and other forms of conventional “spam” are the kind of noise that customers can block before integrating with us. Verbose noise, by contrast, looks like it might be useful because it comes from the customer, but it is in fact meaningless. To parse this kind of data, we train specialized models on tens of thousands of manually curated examples of signal and noise. The result is that Viable has one-of-a-kind proprietary models that know what noise looks like in all its forms, without customers having to tell us. Naked LLMs have no concept of this, and are notoriously bad at parsing noise, even when given dozens of few-shot examples in a prompt.

So really there are three kinds of noise, forming their own little hierarchy. There’s spam. There’s verbose noise: long datapoints that seem useful but really aren’t. And there is low-aspect signal. What does that mean? You can have a datapoint that’s hundreds of words long in which only a single sentence is useful as feedback. Our noise detection model will pass such a datapoint as signal, because it can see that there is signal buried in it, but that datapoint is still problematic. Below is a good example:

Ok I'll agree with you on the bargain phone portion. But to use your own argument against you, I have to believe that more than 1% of Peloton users are android users. Since you referred to it as "being rich enough" to afford one, one would have to use that same line of thinking to determine that these rich peloton users are not operating on bargain android based phones. Why not create an app that targets the flagship android OS phones? I'm not being delusional, but apple OS is not the only premium OS. It's limited to a small amount of equipment that runs it, which makes it simpler for programmers to create apps. The insane amount of android run phones is primarily what causes the difficulty, which you mentioned in a prior comment. I don't think the OS is the problem.

Of this 143-word block of feedback, the only part that is actionable is “Why not create an app that targets the flagship android OS phones?” Only that rhetorical question, which implies a request for a Peloton app for Android, can be seen to have a clear sentiment and intent. The rest of the datapoint is chatter, responding to other commenters on Reddit and arguing the core point. When processing a vast dataset into a high-level report, parsing these concise “aspects” out of the larger noisy blob of text is crucial. So before performing any kind of analysis or quantification, we parse out the important parts of signal datapoints into what we call CCQR aspects. CCQR stands for Complaint, Compliment, Question, Request. Below you can see a very rich, signal-dense datapoint that actually has all four CCQR aspects present in it.

I find scenic rides a great way to work out against my own PB and pretend like it isn't winter outside. But some aspects need work: for those of us who spend time on actual bikes, the experience is pretty strange, but seems like it could be easily improved. 1) As others have stated, why can't the video just be single 20 or 30 or whatever minute single video? 2) City rides where you are in huge crowds of pedestrians feels very weird. 3) Riding a bike on what is clearly a hiking trail, often with stairs, also is strange. Does anyone know how these courses are produced? Would they accept submissions from outside?

You can see that this 118-word comment unpacks into four aspects, totalling 110 words.

1. (complaint) Peloton user complains that city riding videos where they ride through pedestrians felt strange. They also find riding videos on hiking paths instead of cycling paths to be strange, suggesting the user would like riding videos to be more realistic to a normal cycling experience.

2. (compliment) Peloton user compliments the scenic riding videos as a great way to work out and compete against their own personal best performance (PB).

3. (question) Peloton user asks if user-generated cycling videos can be submitted to Peloton and used as ride footage.

4. (request) Peloton user suggests that there should be ride videos that are single continuous clips in one location, instead of multiple clips through different locations.
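One way to picture the four aspects above is as records with a closed set of labels. The following is only an illustrative sketch: the `AspectKind`, `Aspect`, and `make_aspect` names are hypothetical, the summaries are abbreviated from the list above, and nothing here reflects how Viable's models store their output.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class AspectKind(Enum):
    """The four CCQR labels; anything else is rejected."""
    COMPLAINT = "complaint"
    COMPLIMENT = "compliment"
    QUESTION = "question"
    REQUEST = "request"


@dataclass(frozen=True)
class Aspect:
    kind: AspectKind
    summary: str


def make_aspect(label: str, summary: str) -> Aspect:
    """Build an Aspect, raising ValueError for any label outside CCQR."""
    try:
        kind = AspectKind(label.strip().lower())
    except ValueError:
        raise ValueError(f"not a CCQR label: {label!r}") from None
    return Aspect(kind, summary)


# Abbreviated versions of the four aspects listed above.
aspects = [
    make_aspect("complaint", "City and hiking-trail ride videos feel strange."),
    make_aspect("compliment", "Scenic rides are a great way to work out against a PB."),
    make_aspect("question", "Can user-generated cycling videos be submitted?"),
    make_aspect("request", "Offer rides as a single continuous video."),
]

# Tally how many aspects of each kind the datapoint produced.
counts = Counter(aspect.kind for aspect in aspects)
```

Using an enum rather than free-form strings means a label such as "praise" or "feature idea" fails loudly instead of silently creating a fifth category.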

We refer to this process of parsing out and classifying the most useful components of a datapoint as “aspect distillation.” Again, this is done by huge specialized models that we trained on very high-quality, human-curated data. Naked LLMs will be able to detect some of these aspects, but they are not trained to understand the subtle dividing line between what is and what isn’t a useful aspect, or between what is truly a complaint and what is a request or question. Viable has imparted real human product expertise into its models. We have a firm definition of what’s what. With a naked LLM, by contrast, you'll see these definitions shift from inference to inference (run to run) and from dataset to dataset. There are no firm definitions, because the models are trained on a very diffuse body of generalized data and have no validation steps to ensure they are staying on task.
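The run-to-run drift described here can be checked with a simple agreement measure: label the same datapoints several times and count how many received the same label every time. The sketch below is one assumed way to do that; the `label_agreement` function and the sample labels are invented for illustration and are not measurements from any real model.

```python
def label_agreement(runs: list[list[str]]) -> float:
    """Fraction of datapoints that received the same label in every run."""
    if not runs:
        raise ValueError("need at least one run")
    n = len(runs[0])
    if any(len(run) != n for run in runs):
        raise ValueError("every run must label the same datapoints")
    if n == 0:
        return 1.0
    # zip(*runs) groups the labels each datapoint received across runs.
    stable = sum(1 for labels in zip(*runs) if len(set(labels)) == 1)
    return stable / n


# Made-up labels for four datapoints across three runs (illustrative only).
runs = [
    ["complaint", "request", "question", "compliment"],
    ["complaint", "complaint", "question", "compliment"],
    ["complaint", "request", "question", "compliment"],
]

agreement = label_agreement(runs)
```

In this made-up data the second datapoint flips between "request" and "complaint", so only three of the four datapoints are stable across runs.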

At the core of what separates a naked LLM from specialized models in an app is task design. These naked LLMs are known as “foundation models” for a reason. They have a baseline of intelligence that is useful, but not rigorous or specialized. Their understanding of any specific task is diffuse and amorphous, built to be flexible, not reliable. When designing a system to attack something like customer feedback analysis, we experimented with dozens of different ways to do it, worked with dozens of different customer companies, and learned from dozens of failures. From this body of hard work and experience we identified a series of precise task designs that, when chained together, validated, and run through automated quality control, reliably produce truthful reporting for our customers no matter how diverse their data.
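As a rough picture of what "chained together and validated" can mean, the sketch below wires three steps in sequence: a noise filter, an aspect distiller, and a validation check on the output. Everything in it is an assumption for illustration. `run_pipeline`, `toy_is_signal`, and `toy_distill` are hypothetical names, and the toy functions are trivial placeholders standing in for trained models, not a description of AskViable's internals.

```python
import re
from typing import Callable, Iterable

Aspect = tuple[str, str]  # (label, text); a simplified stand-in for a real record

ALLOWED_LABELS = {"complaint", "compliment", "question", "request"}


def run_pipeline(
    datapoints: Iterable[str],
    is_signal: Callable[[str], bool],
    distill: Callable[[str], list[Aspect]],
) -> list[Aspect]:
    """Chain noise filtering, aspect distillation, and output validation."""
    results: list[Aspect] = []
    for text in datapoints:
        if not is_signal(text):  # step 1: drop noise before any analysis
            continue
        for label, aspect_text in distill(text):  # step 2: distill aspects
            # Step 3: keep only well-formed aspects with a known label.
            if label in ALLOWED_LABELS and aspect_text.strip():
                results.append((label, aspect_text.strip()))
    return results


def toy_is_signal(text: str) -> bool:
    """Placeholder noise filter: treats any text containing a question as signal."""
    return "?" in text


def toy_distill(text: str) -> list[Aspect]:
    """Placeholder distiller: labels each sentence ending in '?' as a question."""
    return [("question", s) for s in re.findall(r"[^.?!]+\?", text)]


aspects = run_pipeline(
    ["Okay, cool. Thank you.", "I like the bike. Would they accept submissions from outside?"],
    toy_is_signal,
    toy_distill,
)
```

Passing the steps in as callables keeps each task small and separately testable, which is the point of designing tasks precisely rather than asking one model to do everything in a single prompt.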

Stay tuned for Part 2 of this series, where we'll ascend our pyramid of compute and demonstrate how, as tasks become more demanding, specialization and fine-tuning become even more essential for success.
