In the realm of technology, new advances frequently make us question whether they will replace existing solutions. OpenAI's new Code Interpreter plug-in for ChatGPT, a model that deciphers and executes code, prompts a similar question. Will it mark the end of the commonly used AI data pipelines? Or, are these technologies designed to serve different purposes, each with its unique role and advantages?

Unpacking the Code Interpreter

OpenAI's Code Interpreter is a stellar example of the advances being made in AI technology. For those unfamiliar, a code interpreter is an AI that understands and executes code. Think of it as your personal assistant who can turn your code instructions into actions.

Suppose you need to sort a list of names alphabetically. Using Python, a popular language for AI development, you could write a simple code: names.sort(). Feed this into a code interpreter, and voila! Your list is sorted. The Code Interpreter takes your commands, understands them, and carries them out.

But it's important to remember that a code interpreter, while impressive, operates reactively. It's a 'call-and-response' system. You give it a command, it processes that command, and it returns the output. It's an extraordinary tool, but it's not autonomous. It needs a programmer to direct its actions.

Demystifying Data Pipelines

In contrast, data pipelines play a different, more proactive role. A data pipeline is an automated system that continually processes data. It is designed to extract data from multiple sources, transform it into a usable format, and then load it into a destination for storage or further analysis.

Picture a busy warehouse. It's full of packages arriving from all over, being sorted, labeled, and sent out to their destinations. That's essentially what a data pipeline does with data.

One common application of data pipelines is in Business Intelligence (BI). Companies continually generate vast amounts of data - sales figures, customer data, social media interactions, etc. Data pipelines can collate and process this information, providing companies with real-time insights and helping them make informed business decisions.

Unlike code interpreters, data pipelines don't wait for commands. They are designed to keep working, keep processing, whether we ask for it or not. They are, in essence, autonomous systems.

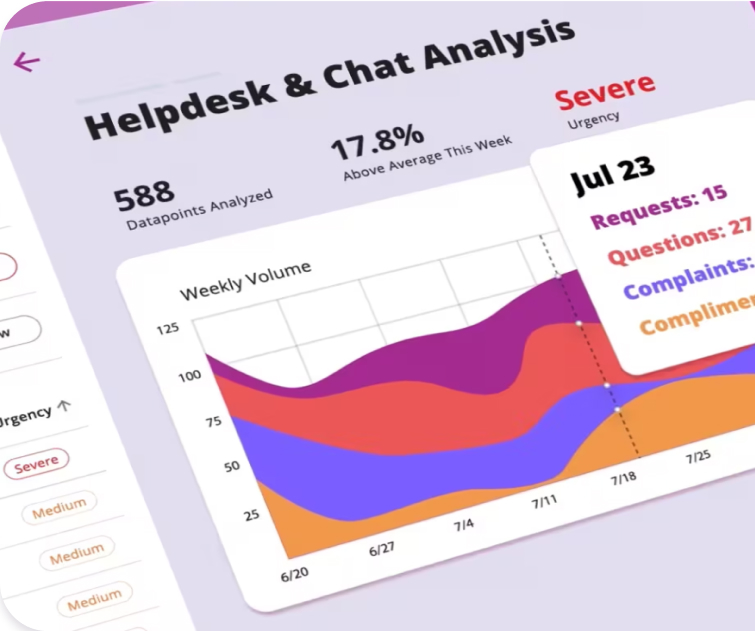

Boost customer satisfaction with precise insights

Surface the most urgent topics by telling our AI what matters to you.

Usability and Scope

Usability is another crucial distinction between these tools. While a code interpreter is a powerful tool, its power lies primarily in the hands of those who know how to code. It's a bit like a high-performance sports car. In the right hands, it's incredible. But if you don't know how to drive it, it's just a very expensive sculpture.

On the other hand, data pipelines can be designed to be user-friendly for non-programmers. BI tools, for example, use data pipelines to present complex data in an easily digestible format, offering dashboards and visualizations that anyone can understand, regardless of their coding proficiency.

Handling Large Amounts of Data

Let's talk about data volume. Data pipelines are often built to handle vast amounts of data. They are optimized to avoid processing bottlenecks, ensuring that data flows smoothly and efficiently from source to destination.

In contrast, while a code interpreter can handle large datasets, it isn't inherently designed for such heavy-duty work. It's like using a sports car for cargo transport. It's not that it can't do it, but it's not what it was designed for.

Competition or Complementarity?

So, will OpenAI's code interpreter model for ChatGPT replace AI data pipelines? Based on the distinctions we've discussed, it seems unlikely. Code interpreters and data pipelines each have unique roles and advantages. More importantly, they address different needs.

Code interpreters, like OpenAI's model, open up new avenues for rapid development and testing in software design. They allow programmers to quickly iterate on their code, getting immediate feedback and making adjustments on the fly.

Data pipelines, meanwhile, keep the vast data machinery of modern businesses humming along. They ensure that all that valuable data doesn't go to waste, transforming it into actionable insights and aiding strategic decision-making.

Instead of competing, these tools should complement one another, each catering to unique challenges and offering distinct advantages. The key lies in understanding these differences and leveraging each tool effectively. Therefore, while new technologies like OpenAI's Code Interpreter are exciting and impactful, they don't necessarily signal the end for existing technologies like AI data pipelines. In technology, as in nature, evolution often means diversification, not replacement.

Need to build a reliable data pipeline for your business? See how Viable can help.

%20(10).png)

%20(9).png)

%20(8).png)