While surveys may seem simple to build at first glance, they are anything but. If you use multiple choice as your primary method, the survey inevitably becomes long, bias creeps into the leading questions, and you’re left with lackluster insights. If you use something like NPS where you ask a single multiple choice question paired with a comment box, you have the potential for rich, unbiased feedback, but the analysis required to pull insights from it is cumbersome and time-consuming. A combo of both multiple choice and freeform text questions is best, but without a dedicated team to sort through and analyze hundreds or even thousands of rows of feedback, you’ll be left with a lot of data and nothing to show for it.

Our GPT-3 powered AI can analyze both types of data at human-level fidelity, enabling you to streamline your surveys and keep multiple choice questions focused on objective information (i.e. traits and metadata) and use open-form questions for subjective feedback. Our AI can work with any kind of survey data, be it Product Market Fit surveys, market research, NPS, CSAT and more — and spare your team countless hours and resources along the way.

We recently worked with a leading global digital transformation firm to analyze their employee engagement survey. For the purposes of employee confidentiality, we will refer to the company as “Support Inc” throughout this post, but the analysis discussed herein was performed on concrete data from their real-world survey. In it, Support Inc asked their employees a mix of open-ended questions alongside multiple choice. They understood the best way to avoid bias was by opening the floor to their employees, but they also knew they needed to collect key quantitative data, like demographics. Their team did not have the time to do the qualitative analysis necessary for freeform questions, so they began looking for a solution that could go beyond summarization and truly analyze both types of data, together. That’s how they found Viable.

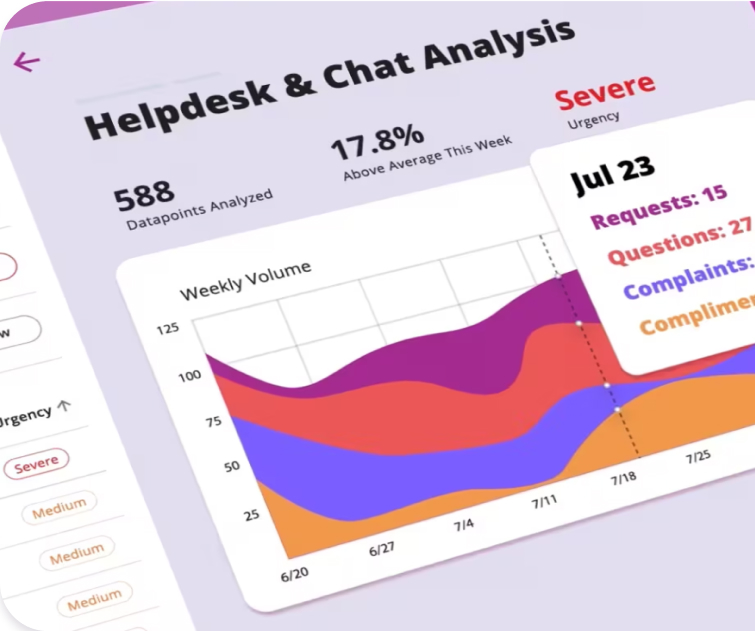

Our AI ingested thousands of rows of qualitative and quantitative survey data and built a report in minutes, a task that would take humans several days. First, it organized their info by question, making it easy to click in and understand the most important themes and recurring responses within each question. It also assigned a level of urgency to each theme based on the language used by the respondents; if an employee is threatening to quit or discussing critical issues such as workplace discrimination, those themes would receive a higher urgency score. At the top of each question’s section sits a natural-language TL;DR summary so succinct you’ll swear it was written by a human. Below that, you’ll find the sub-themes found in employees’ responses.


Unlike other text analysis tools, our AI treats multiple choice answers as metadata, layering them into the analysis. For the prompt “One thing I would change about Support Inc is…”, the number one response focused on pay not keeping pace with the cost of living. As you scroll down, there’s a snapshot of who responded this way, surfaced with the data from the multiple choice demographic questions in the survey. Interestingly, 65% are female, which may point to a larger issue such as gender wage disparity. And while the respondents providing this feedback are unhappy with their pay, the majority do not appear to be a flight risk, given that 51% of them “feel comfortable, happy and supported at Support Inc”. Having this more nuanced information helps paint a better picture for Support Inc when considering changes to its pay structure.
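To make the idea of layering multiple choice answers onto a theme more concrete, here is a minimal sketch in Python. It is an illustration only and not how our AI is implemented: the toy rows, the field names (`theme`, `gender`, `feels_supported`), and the `snapshot` helper are all hypothetical, and the numbers it produces have nothing to do with Support Inc's results.

```python
from collections import Counter

# Hypothetical toy rows: each open-ended response is assumed to have already
# been tagged with a theme, and carries its multiple choice answers as metadata.
responses = [
    {"theme": "pay", "gender": "female", "feels_supported": True},
    {"theme": "pay", "gender": "female", "feels_supported": False},
    {"theme": "pay", "gender": "male", "feels_supported": True},
    {"theme": "pay", "gender": "female", "feels_supported": True},
    {"theme": "equipment", "gender": "male", "feels_supported": True},
]


def snapshot(rows, theme, field):
    """Return the percentage share of each value of `field` among rows tagged with `theme`."""
    matching = [row[field] for row in rows if row["theme"] == theme]
    if not matching:
        return {}  # no responses carry this theme
    counts = Counter(matching)
    return {value: round(100 * n / len(matching), 1) for value, n in counts.items()}


# Who raised the "pay" theme, broken down by two multiple choice questions.
print(snapshot(responses, "pay", "gender"))
print(snapshot(responses, "pay", "feels_supported"))
```

The point of the sketch is simply that once a freeform answer is tied to a theme, every multiple choice answer from the same respondent can be counted against that theme, which is what makes a "who responded this way" breakdown possible.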

If we knew other details like the geographic location of the Support Inc respondents, our AI could include that in its analysis as well. This would be particularly useful when considering raises, given how much inflation has impacted cost of living across the U.S., with some cities and states being more affected than others. Armed with that information, our AI could help Support Inc be more precise in determining which geographic areas or departments might warrant a raise more than others.

Unsurprisingly, as you look at the other themes from the survey, pay comes up again in response to the question, “How can we keep you engaged while working remotely for Support Inc?”. Alongside pay, better equipment and communication are also listed. In addition to the qualitative feedback that reinforces this recurring theme, our AI parsed out that 58% of respondents replied that they “strongly disagree” that pay is fair, all of which led the AI to accurately label this issue as Severe.

At the bottom of the theme analysis, there is a section where you can ask our AI any lingering questions you may have. Since employees listed better equipment as something that will help keep them engaged, we decided to ask “what kind of equipment upgrades do employees want?”. The AI replied, “They want to increase the number of servers so they can handle more traffic.” Knowing this, Support Inc can make better informed decisions on where to invest dollars instead of assuming that employees want new computers or a work-from-home stipend.

The report goes on and contains a wealth of insights that would take the Support Inc team countless hours to synthesize. Amazingly, all of this analysis was done without any human interpretation. Because of the fidelity, Support Inc was able to quickly build an action plan to address employee requests, without sinking hours on the front end to understand their survey feedback. This is only one example of our software's ability to analyze qualitative survey feedback. Our AI can analyze any kind of freeform text from experience surveys to call transcripts, market research, NPS, CSAT and more.

Want to see what our AI can do with your qualitative data?
